Introduction

We propose a new paradigm for video generation that combines the flexibility of masked auto-regression (MAR) in a continuous space with the robust generative capabilities of diffusion models (DM). Specifically, we present a scalable training recipe and an efficient neural architecture design for video generation. Our model decomposes video generation into two sub-tasks, temporal and spatial modelling, handled by distinct networks with an asymmetric design based on the following two principles:

- MAR handles long-range temporal modelling, while DM focuses on detailed spatial modelling.
- MAR operates with more parameters at a lower resolution, while DM operates with fewer parameters at a higher resolution.
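To make the second principle concrete, the sketch below counts spatio-temporal tokens and full-attention token pairs at two resolutions. The latent sizes, the patch size, and the helper names `num_tokens` and `attention_pairs` are hypothetical, chosen only for illustration; they are not MarDini's actual configuration.

```python
def num_tokens(frames: int, height: int, width: int, patch: int) -> int:
    """Number of spatio-temporal tokens for a latent video cut into square patches."""
    return frames * (height // patch) * (width // patch)


def attention_pairs(tokens: int) -> int:
    """Full self-attention compares every token with every token."""
    return tokens * tokens


# Hypothetical latent sizes, for illustration only.
frames, patch = 17, 2
high_res = num_tokens(frames, 64, 64, patch)  # resolution assumed for the diffusion model
low_res = num_tokens(frames, 32, 32, patch)   # resolution assumed for the planning model

token_ratio = high_res // low_res
pair_ratio = attention_pairs(high_res) // attention_pairs(low_res)
print(token_ratio, pair_ratio)
```

Under these assumptions, halving the spatial resolution cuts the token count by 4 and the number of attention pairs by 16, which is why placing the larger network at the lower resolution can keep spatio-temporal attention affordable.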

Following these principles, our model integrates MAR-based planning signals with a lightweight DiT-based diffusion model, hence the name MarDini. Our empirical study on MarDini highlights the following key characteristics:

- Flexibility. With MAR conditioning, MarDini naturally supports a range of video generation tasks through flexible masking strategies. For example, when given the first frame and masking the rest, it performs image-to-video generation; when given a video and masking subsequent frames, it performs video expansion; and, when given the first and last frames and masking the middle frames, it performs video interpolation.

- Scalability. MarDini can be trained from scratch at scale, without relying on generative image-based pre-training. In contrast to most video generation models, which treat video as a secondary task following image generation, MarDini leverages mask ratio tuning to progressively adjust the difficulty of the training task. This approach enables the model to scale from video interpolation to full video generation, bypassing the need for image-based pre-training.

- Efficiency. MarDini's asymmetric design allocates more computational resources to lower resolutions, making it memory-efficient and fast during inference. With lower overall memory usage, MarDini allows the deployment of computationally intensive spatio-temporal attention mechanisms at scale, improving its ability to model complex motion dynamics.
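The masking strategies listed under Flexibility can be sketched as frame-level boolean masks. This is a minimal illustration, not the released implementation: the function name `task_mask`, the task labels, and the convention that `True` marks a frame to be generated are all assumptions.

```python
import numpy as np


def task_mask(num_frames: int, task: str, num_given: int = 1) -> np.ndarray:
    """Return a boolean array where True marks a masked frame (to be generated)."""
    mask = np.ones(num_frames, dtype=bool)  # start with every frame masked
    if task == "image_to_video":
        mask[0] = False                     # only the first frame is given
    elif task == "expansion":
        mask[:num_given] = False            # a leading clip of num_given frames is given
    elif task == "interpolation":
        mask[0] = False                     # the first and last frames are given
        mask[-1] = False
    else:
        raise ValueError(f"unknown task: {task}")
    return mask


i2v = task_mask(17, "image_to_video")
expansion = task_mask(17, "expansion", num_given=5)
interpolation = task_mask(17, "interpolation")
print(int(i2v.sum()), int(expansion.sum()), int(interpolation.sum()))
```

The point of the sketch is that the task is selected purely by which frames are left unmasked; the same model is used in every case.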

MarDini Training Pipeline Overview. A latent representation is computed for the unmasked frames, which serve as the conditioning signal for the generative process. On the one hand, a planning model auto-regressively encodes global conditioning signals from a low-resolution version of the unmasked latent inputs. On the other hand, these planning signals are fed to the diffusion-based generation model through cross-attention layers. The diffusion model also ingests a high-resolution version of the input conditions, enabling generation with a coherent temporal structure and giving it a direct mechanism to attend to fine-grained details of the unmasked frames. MarDini is trained end-to-end with a masked frame-level diffusion loss.
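A masked frame-level loss combined with a progressively increasing mask ratio might look roughly like the sketch below. Everything here is an assumption made for illustration: the linear schedule and its end points, the uniform random choice of masked frames, and the use of a plain mean squared error, where `pred` and `target` stand for whatever quantity the diffusion model regresses.

```python
import numpy as np


def mask_ratio_at(step: int, total_steps: int, start: float = 0.25, end: float = 1.0) -> float:
    """Linearly raise the fraction of masked frames during training (assumed schedule)."""
    progress = min(max(step / total_steps, 0.0), 1.0)
    return start + (end - start) * progress


def sample_frame_mask(num_frames: int, ratio: float, rng: np.random.Generator) -> np.ndarray:
    """Mask about ratio * num_frames frames, chosen uniformly at random (True = masked)."""
    num_masked = min(max(int(round(ratio * num_frames)), 1), num_frames)
    mask = np.zeros(num_frames, dtype=bool)
    mask[rng.choice(num_frames, size=num_masked, replace=False)] = True
    return mask


def masked_frame_loss(pred: np.ndarray, target: np.ndarray, mask: np.ndarray) -> float:
    """Mean squared error averaged over masked frames only.

    pred and target have shape (frames, channels, height, width).
    """
    per_frame = ((pred - target) ** 2).reshape(pred.shape[0], -1).mean(axis=1)
    return float(per_frame[mask].mean())


rng = np.random.default_rng(0)
ratio = mask_ratio_at(step=500, total_steps=1000)  # halfway through the ramp
mask = sample_frame_mask(17, ratio, rng)
target = rng.normal(size=(17, 4, 8, 8))
pred = target.copy()
pred[~mask] += 1.0  # errors on unmasked (conditioning) frames are ignored
loss = masked_frame_loss(pred, target, mask)
print(ratio, int(mask.sum()), loss)
```

In the toy usage, the prediction is perturbed only on unmasked frames, so the loss stays at zero: conditioning frames do not contribute to the objective.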

MarDini Generations

Here, we showcase MarDini's video generation capabilities through diverse masking strategies.

Image-To-Video Results

The primary application of MarDini is image-to-video generation. We demonstrate this capability by using one reference frame, placed in the middle position, as the conditioning input and generating 16 additional frames. Below, we present a diverse set of generated videos, each containing 17 frames rendered at 8 FPS, producing smooth videos of roughly 2 seconds.
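The frame arithmetic behind this setup can be checked in a few lines. The variable names are illustrative, and zero-based indexing of the middle frame is an assumption about how "middle position" is counted.

```python
num_frames = 17
fps = 8

duration_s = num_frames / fps      # clip length in seconds
reference_index = num_frames // 2  # zero-based index of the middle frame
generated = [i for i in range(num_frames) if i != reference_index]
before = sum(i < reference_index for i in generated)
after = sum(i > reference_index for i in generated)

print(duration_s, reference_index, len(generated), before, after)
```

With 17 frames at 8 FPS, the clip lasts 2.125 seconds, and a middle reference frame leaves 8 generated frames on each side.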

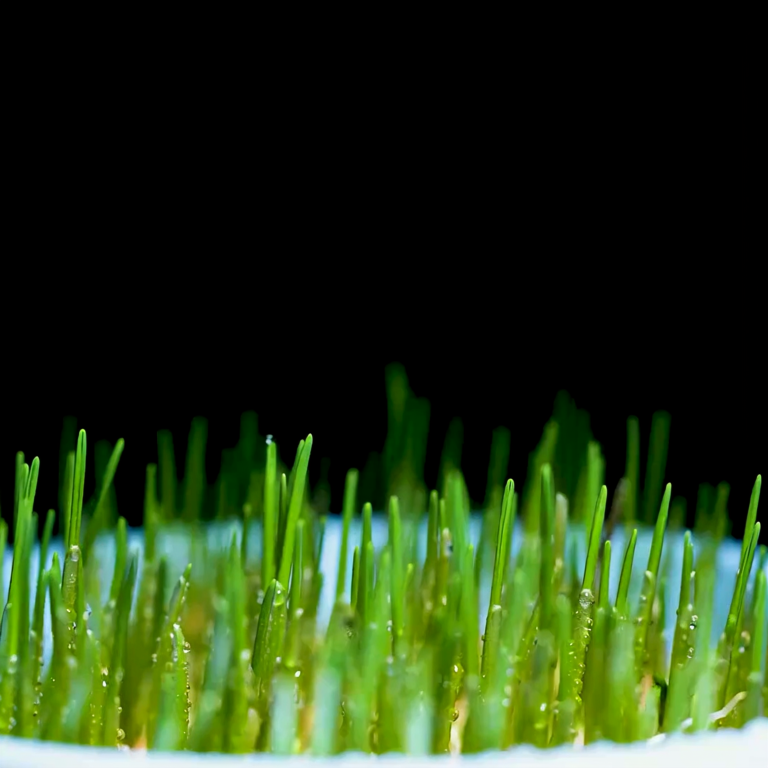

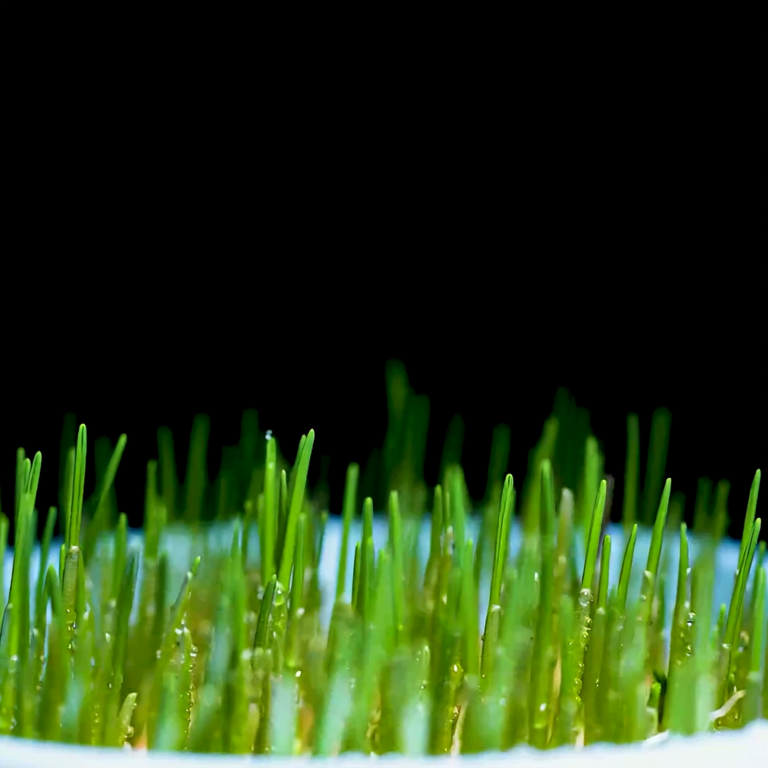

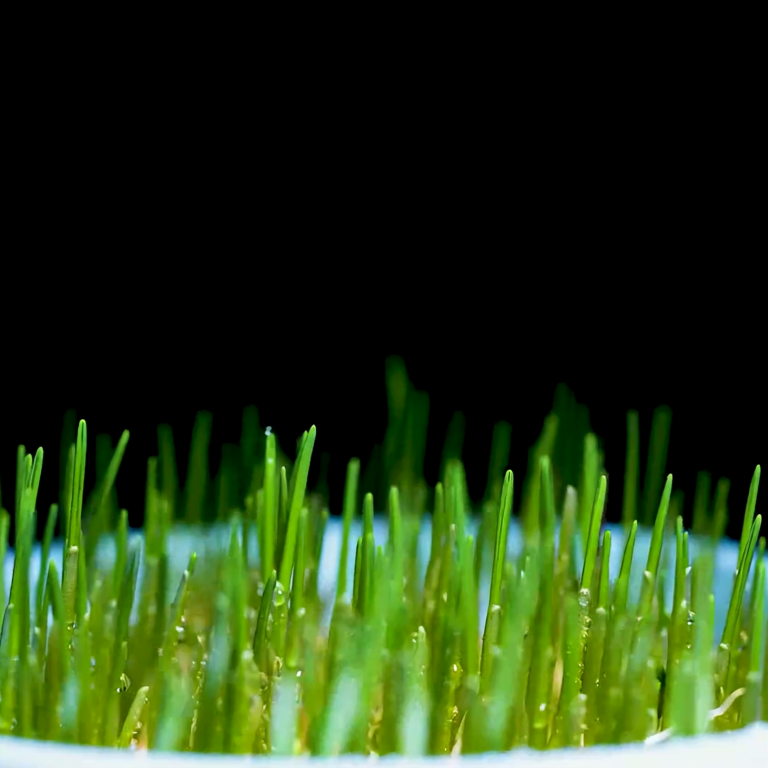

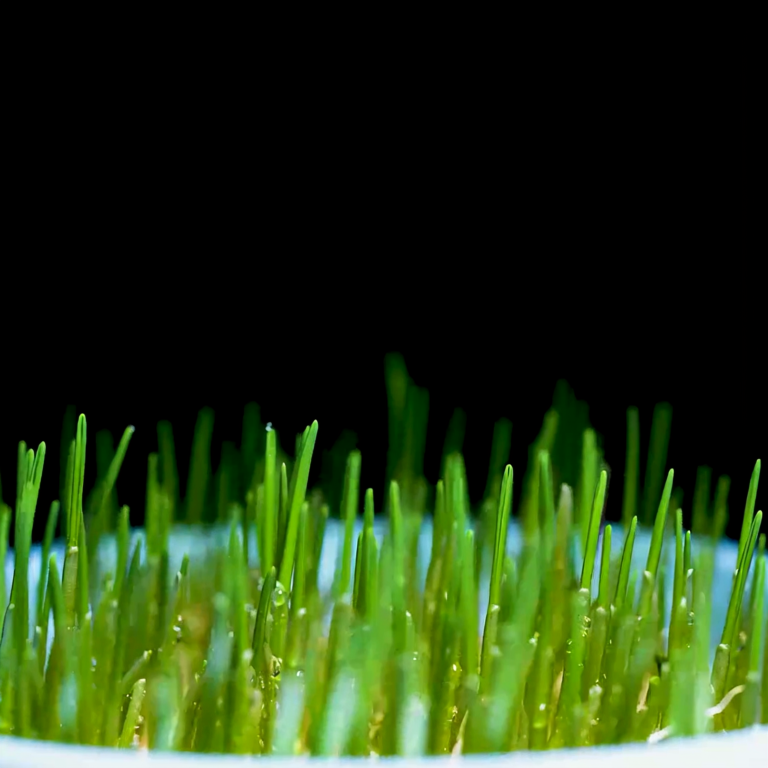

Video Expansion Results

MarDini is also capable of video expansion by conditioning on existing videos of any duration. We demonstrate this by generating 2-second expansions from 5-frame reference videos, adding 12 new frames to each sequence, shown below.

Video Interpolation Results

MarDini achieves video interpolation by generating intermediate frames using the first and last frames as conditioning signals. When these boundary frames are identical, MarDini can create seamless looping videos.

Auto-Regressive Generation for Slow-Motion Videos

By using MAR for high-level planning, MarDini supports auto-regressive inference, generating additional frames beyond those defined in training. We demonstrate this with hierarchical auto-regressive generation: starting with a video, we segment it into multiple clips, expand each clip, and treat the expanded clip as a new video for recursive interpolation. For example, starting with 4 images, MarDini uses a 17-frame window to expand them into a 128-frame slow-motion video (64× expansion). This shows that our model isn't constrained by the training window size, emphasizing its potential for long-range video generation.
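The recursive structure of this procedure can be sketched with a stand-in interpolator. In the sketch, linear blending replaces the generative model, and the names `interpolate_pair`, `expand_once`, and `expand_recursively` are hypothetical. It illustrates only the recursion and does not attempt to reproduce the 17-frame window or the frame counts quoted above.

```python
import numpy as np


def interpolate_pair(first: np.ndarray, last: np.ndarray, num_inner: int) -> list:
    """Stand-in for the generative model: linearly blend between two frames.

    Returns the num_inner intermediate frames, excluding both endpoints.
    """
    weights = np.linspace(0.0, 1.0, num_inner + 2)[1:-1]
    return [(1.0 - w) * first + w * last for w in weights]


def expand_once(frames: list, num_inner: int) -> list:
    """Insert num_inner new frames between every adjacent pair of frames."""
    out = [frames[0]]
    for first, last in zip(frames[:-1], frames[1:]):
        out.extend(interpolate_pair(first, last, num_inner))
        out.append(last)
    return out


def expand_recursively(frames: list, num_inner: int, rounds: int) -> list:
    """Treat each expanded video as the input to the next round of interpolation."""
    for _ in range(rounds):
        frames = expand_once(frames, num_inner)
    return frames


keyframes = [np.full((2, 2), float(i)) for i in range(4)]  # 4 toy "images"
video = expand_recursively(keyframes, num_inner=1, rounds=2)
print(len(video))
```

Each round maps n frames to (n - 1) * (num_inner + 1) + 1 frames, so the toy example grows from 4 to 7 to 13 frames while the original keyframes are preserved.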

Zero-Shot 3D View Synthesis

Although trained solely on video data, MarDini shows preliminary spatial understanding, suggesting potential for 3D applications. In the following example, two views of a fixed object serve as the first and last reference frames, while intermediate frames are generated, similar to our video interpolation task. The model effectively produces convincing, 3D-consistent views, showcasing its promise for 3D generation. Notably, no camera motion control signals were used. We plan to explore MarDini's performance on 3D data with better control in future work.

Conclusion

We have introduced MarDini, a new family of generative models for video based on auto-regressive diffusion, in which a large planning model provides powerful conditioning to a much smaller diffusion model.

Our design philosophy considers efficiency from model conception: our heaviest model component is executed only once, on lower-resolution inputs, whereas our generative module focuses on fine-grained details at the frame level, reconciling high-level conditioning with image details.

Our model is unique in that it leverages a masked auto-regressive loss directly at the frame level. MarDini offers multiple generative capabilities from a single model, e.g., long-term video interpolation, video expansion, and image animation.

Citation

To cite the paper, please use the following BibTeX entry:

@article{liu2024mardini,
  title={MarDini: Masked Autoregressive Diffusion for Video Generation at Scale},
  author={Haozhe Liu and Shikun Liu and Zijian Zhou and Mengmeng Xu and Yanping Xie and Xiao Han and Juan C. Pérez and Ding Liu and Kumara Kahatapitiya and Menglin Jia and Jui-Chieh Wu and Sen He and Tao Xiang and Jürgen Schmidhuber and Juan-Manuel Pérez-Rúa},
  journal={arXiv preprint arXiv:2410.20280},
  year={2024}
}